This page shows you how to integrate Grafana with a Tiger Cloud service and gain insights from visualizations of data optimized for size and speed in the columnstore.


Prerequisites

To follow the steps on this page:

- Create a target Tiger Cloud service with the Real-time analytics capability enabled. You need your connection details. This procedure also works for self-hosted TimescaleDB.

- Install and run self-managed Grafana, or sign up for Grafana Cloud.

Optimize time-series data in hypertables

Hypertables are tables in TimescaleDB that automatically partition your time-series data by time. Time-series data represents the way a system, process, or behavior changes over time. Hypertables enable TimescaleDB to work efficiently with time-series data. Each hypertable is made up of child tables called chunks. Each chunk is assigned a range of time, and only contains data from that range. When you run a query, TimescaleDB identifies the correct chunk and runs the query on it, instead of going through the entire table.

Hypercore is the hybrid row-columnar storage engine in TimescaleDB used by hypertables. Traditional databases force a trade-off between fast inserts (row-based storage) and efficient analytics (columnar storage). Hypercore eliminates this trade-off, allowing real-time analytics without sacrificing transactional capabilities.

Hypercore dynamically stores data in the most efficient format for its lifecycle:

- Row-based storage for recent data: the most recent chunk (and possibly more) is always stored in the rowstore, ensuring fast inserts, updates, and low-latency single record queries. Additionally, row-based storage is used as a writethrough for inserts and updates to columnar storage.

- Columnar storage for analytical performance: chunks are automatically compressed into the columnstore, optimizing storage efficiency and accelerating analytical queries.

- Import time-series data into a hypertable

  - Unzip nyc_data.tar.gz to a <local folder>.

    This test dataset contains historical data from New York's yellow taxi network. To import up to 100GB of data directly from your current PostgreSQL-based database, migrate with downtime using native PostgreSQL tooling. To seamlessly import 100GB-10TB+ of data, use the live migration tooling supplied by Tiger Data. To add data from non-PostgreSQL data sources, see Import and ingest data.

  - In Terminal, navigate to <local folder> and connect to your service using your connection details.

- Create an optimized hypertable for your time-series data:

  - Create a hypertable with hypercore enabled by default for your time-series data using CREATE TABLE. For efficient queries on data in the columnstore, remember to segmentby the column you will use most often to filter your data. Run the CREATE TABLE command in your SQL client.

    If you are self-hosting TimescaleDB v2.19.3 and below, create a relational table, then convert it using create_hypertable. You then enable hypercore with a call to ALTER TABLE.
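The CREATE TABLE step can be sketched as follows. This is a minimal sketch, not the exact statement from the original page: the table name `rides`, the column list, and the choice of `vendor_id` as the segmentby column are assumptions. Match the columns to the files in nyc_data.tar.gz before you run it.

```sql
-- Sketch only: table name, column list, and segmentby/orderby choices are assumptions.
CREATE TABLE rides (
    vendor_id             TEXT,
    pickup_datetime       TIMESTAMP WITHOUT TIME ZONE NOT NULL,
    dropoff_datetime      TIMESTAMP WITHOUT TIME ZONE NOT NULL,
    passenger_count       NUMERIC,
    trip_distance         NUMERIC,
    pickup_longitude      NUMERIC,
    pickup_latitude       NUMERIC,
    rate_code             INTEGER,
    dropoff_longitude     NUMERIC,
    dropoff_latitude      NUMERIC,
    payment_type          INTEGER,
    fare_amount           NUMERIC,
    extra                 NUMERIC,
    mta_tax               NUMERIC,
    tip_amount            NUMERIC,
    tolls_amount          NUMERIC,
    improvement_surcharge NUMERIC,
    total_amount          NUMERIC
) WITH (
    tsdb.hypertable,                           -- create the table as a hypertable
    tsdb.partition_column = 'pickup_datetime', -- partition chunks by pickup time
    tsdb.create_default_indexes = false,       -- indexes are added in a later step
    tsdb.segmentby = 'vendor_id',              -- column most often used in filters
    tsdb.orderby = 'pickup_datetime DESC'
);
```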

  - Add another dimension to partition your hypertable more efficiently:
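One way to add a second dimension is a hash partition on a low-cardinality column. The sketch below assumes the hypertable is named `rides`, that it is still empty, and that `payment_type` with 2 partitions is a reasonable choice. None of these values come from this page.

```sql
-- Sketch only: table name, column, and partition count are assumptions.
-- Add the dimension before loading data into the hypertable.
SELECT add_dimension('rides', by_hash('payment_type', 2));
```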

  - Create an index to support efficient queries by vendor, rate code, and passenger count:
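A sketch of the indexes this step describes, assuming the `rides` table and the column names `vendor_id`, `rate_code`, and `passenger_count`. Pairing each column with `pickup_datetime DESC` is a common pattern for time-series filters, not a requirement.

```sql
-- Sketch only: table and column names are assumptions.
CREATE INDEX ON rides (vendor_id, pickup_datetime DESC);
CREATE INDEX ON rides (rate_code, pickup_datetime DESC);
CREATE INDEX ON rides (passenger_count, pickup_datetime DESC);
```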


- Create tables for relational data:

  - Add a table to store the payment types data:
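A minimal sketch of the payment types table. The table name `payment_types` and its two columns are assumptions. Populate it with the payment codes used in your copy of the dataset.

```sql
-- Sketch only: table and column names are assumptions.
CREATE TABLE IF NOT EXISTS payment_types (
    payment_type INTEGER,
    description  TEXT
);
```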

  - Add a table to store the rates data:
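A minimal sketch of the rates table. The table name `rates` is an assumption. The six descriptions are the ones that appear in the query results later on this page, and the code-to-description mapping is inferred by matching the trip counts in the two result sets.

```sql
-- Sketch only: table name is an assumption; the mapping is inferred from
-- the result tables shown later on this page.
CREATE TABLE IF NOT EXISTS rates (
    rate_code   INTEGER,
    description TEXT
);

INSERT INTO rates (rate_code, description) VALUES
    (1, 'standard rate'),
    (2, 'JFK'),
    (3, 'Newark'),
    (4, 'Nassau or Westchester'),
    (5, 'negotiated fare'),
    (6, 'group ride');
```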

- Upload the dataset to your service

Have a quick look at your data

You query hypertables in exactly the same way as you would a relational table.

Use one of the following SQL editors to run a query and see the data you uploaded:

- Data mode: write queries, visualize data, and share your results in Tiger Cloud Console for all your services.

- SQL editor: write, fix, and organize SQL faster and more accurately in Tiger Cloud Console for a service.

- psql: easily run queries on your services or self-hosted TimescaleDB deployment from Terminal.

- Display the number of rides for each fare type:
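A query along these lines produces the `rate_code` and `num_trips` columns in the results for this step. The table name `rides`, the `vendor_id` column, and the first-week date filter are assumptions. The filter is included because the counts for this step match those of the first-week query in the next step.

```sql
-- Sketch only: table name, vendor_id column, and date filter are assumptions.
SELECT rate_code, COUNT(vendor_id) AS num_trips
FROM rides
WHERE pickup_datetime < '2016-01-08'
GROUP BY rate_code
ORDER BY rate_code;
```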

This simple query runs in 3 seconds. You see something like:

| rate_code | num_trips |
|-----------|-----------|
| 1         | 2266401   |
| 2         | 54832     |
| 3         | 4126      |
| 4         | 967       |
| 5         | 7193      |
| 6         | 17        |
| 99        | 42        |

- To select all rides taken in the first week of January 2016, and return the total number of trips taken for each rate code:
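A sketch of such a query, assuming the `rides` hypertable and a `rates` lookup table joined on `rate_code`. The names are assumptions. Rate codes without a matching row in `rates` drop out of the result because this is an inner join.

```sql
-- Sketch only: table and column names are assumptions.
SELECT rates.description, COUNT(vendor_id) AS num_trips
FROM rides
JOIN rates ON rides.rate_code = rates.rate_code
WHERE pickup_datetime < '2016-01-08'
GROUP BY rates.description
ORDER BY LOWER(rates.description);
```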

On this large amount of data, this analytical query on data in the rowstore takes about 59 seconds. You see something like:

| description           | num_trips |
|-----------------------|-----------|
| group ride            | 17        |
| JFK                   | 54832     |
| Nassau or Westchester | 967       |
| negotiated fare       | 7193      |
| Newark                | 4126      |
| standard rate         | 2266401   |

Optimize your data for real-time analytics

When TimescaleDB converts a chunk to the columnstore, it automatically creates a different schema for your data. TimescaleDB creates and uses custom indexes to incorporate the segmentby and orderby parameters when you write to and read from the columnstore.

To increase the speed of your analytical queries by a factor of 10 and reduce storage costs by up to 90%, convert data to the columnstore:

- Connect to your service

  In Tiger Cloud Console, open an SQL editor. The in-Console editors display the query speed. You can also connect to your service using psql.

- Add a policy to convert chunks to the columnstore at a specific time interval

  For example, convert data older than 8 days to the columnstore:
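A minimal sketch of the policy call, assuming the hypertable is named `rides`. The 8-day interval comes from the example in this step.

```sql
-- Sketch only: the hypertable name is an assumption.
-- Chunks whose data is older than 8 days are converted to the columnstore.
CALL add_columnstore_policy('rides', after => INTERVAL '8 days');
```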

See add_columnstore_policy. The data you imported for this tutorial is from 2016, so it was already added to the columnstore by default. However, you get the idea. To see the space savings in action, follow Try the key features.

Connect Grafana to Tiger Cloud

To visualize the results of your queries, enable Grafana to read the data in your service:

- Log in to Grafana

  In your browser, log in to either:

  - Self-hosted Grafana: at http://localhost:3000/. The default credentials are admin, admin.
  - Grafana Cloud: use the URL and credentials you set when you created your account.

- Add your service as a data source

  - Open Connections > Data sources, then click Add new data source.
  - Select PostgreSQL from the list.
  - Configure the connection:
    - Host URL, Database name, Username, and Password: configure using your connection details. Host URL is in the format <host>:<port>.
    - TLS/SSL Mode: select require.
    - PostgreSQL options: enable TimescaleDB.
    - Leave the default setting for all other fields.
  - Click Save & test.

Monitor performance over time

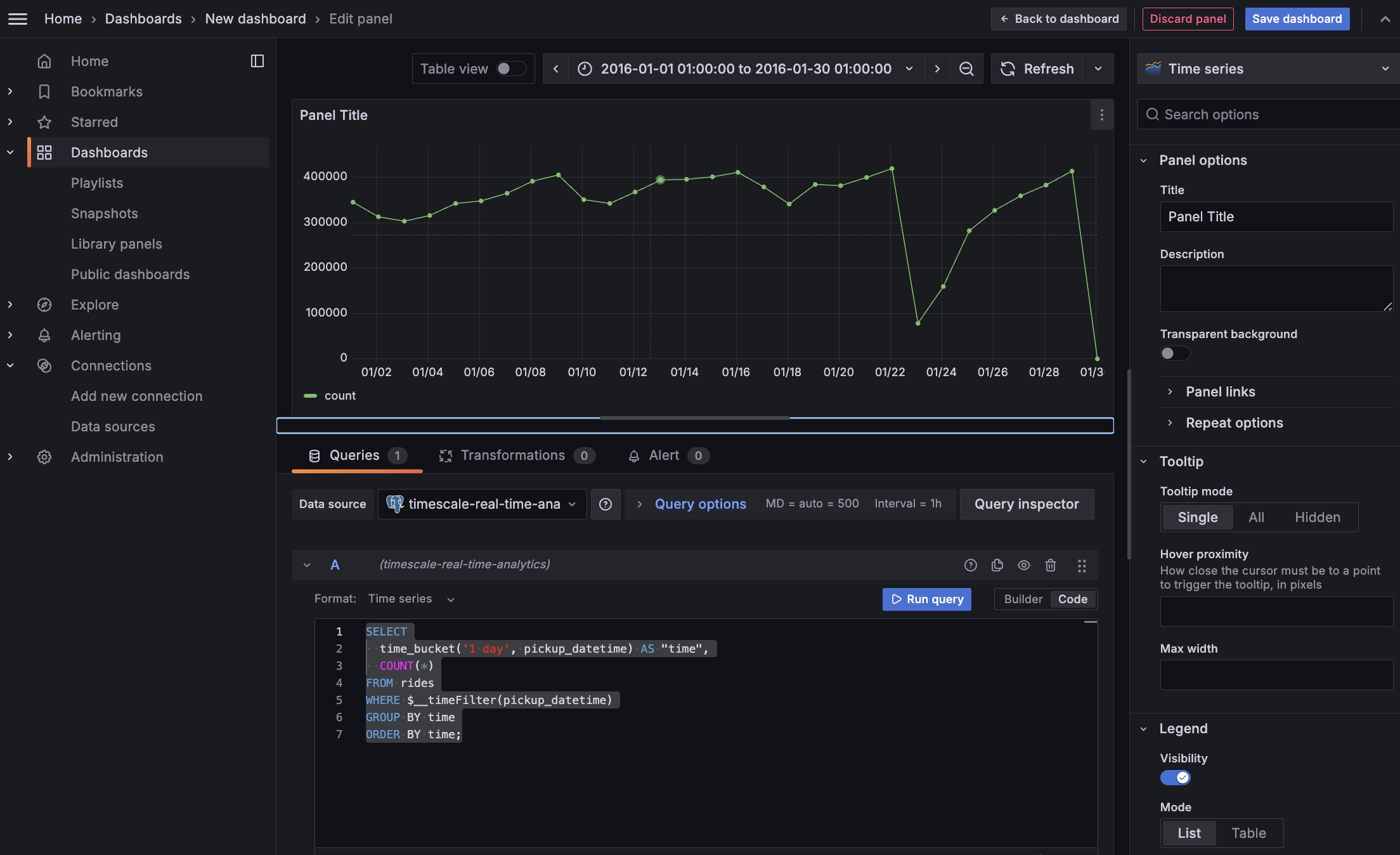

A Grafana dashboard represents a view into the performance of a system. Each dashboard consists of one or more panels, which represent information about a specific metric related to that system.

To visually monitor the volume of taxi rides over time:

- Create the dashboard

  - On the Dashboards page, click New and select New dashboard.
  - Click Add visualization.
  - Select the data source that connects to your service. The Time series visualization is chosen by default.
  - In the Queries section, select Code, then select Time series in Format.
  - Select the data range for your visualization: the data set is from 2016. Click the date range above the panel and set:
    - From: 2016-01-01 01:00:00
    - To: 2016-01-30 01:00:00

- Combine TimescaleDB and Grafana functionality to analyze your data

  Combine a TimescaleDB time_bucket with the Grafana $__timeFilter() function to set the pickup_datetime column as the filtering range for your visualizations. A query of this kind groups the results by day and orders them by time.
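A sketch of such a panel query, assuming the hypertable is named `rides`. `$__timeFilter` is a Grafana macro that expands to a range condition on the given column, so this query only runs inside Grafana, not directly in psql.

```sql
-- Sketch only: the table name is an assumption.
SELECT
  time_bucket('1 day', pickup_datetime) AS "time",  -- one bucket per day
  COUNT(*) AS rides
FROM rides
WHERE $__timeFilter(pickup_datetime)                -- dashboard time range
GROUP BY "time"
ORDER BY "time";
```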

- Click Save dashboard

Optimize revenue potential

Having all this data is great, but how do you use it? Monitoring data is useful to check what has happened, but how can you analyze this information to your advantage? This section explains how to create a visualization that shows how you can maximize potential revenue.

Set up your data for geospatial queries

To add geospatial analysis to your ride count visualization, you need geospatial data to work out which trips originated where. As TimescaleDB is compatible with all PostgreSQL extensions, use PostGIS to slice data by time and location.

- Connect to your service and add the PostGIS extension:
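Adding the extension is typically a single statement. The `IF NOT EXISTS` clause is added here so the sketch is safe to rerun.

```sql
-- Enable PostGIS in the current database.
CREATE EXTENSION IF NOT EXISTS postgis;
```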

- Add geometry columns for pick up and drop off locations:
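A sketch of the two columns, assuming the `rides` table. The column names `pickup_geom` and `dropoff_geom` and SRID 2163 (a US equal-area projection measured in meters) are assumptions chosen so that distances can later be compared in meters.

```sql
-- Sketch only: table name, column names, and SRID are assumptions.
ALTER TABLE rides ADD COLUMN pickup_geom  geometry(POINT, 2163);
ALTER TABLE rides ADD COLUMN dropoff_geom geometry(POINT, 2163);
```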

- Convert the latitude and longitude points into geometry coordinates that work with PostGIS:
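A sketch of the conversion, assuming the `rides` table, numeric longitude and latitude columns, and geometry columns named `pickup_geom` and `dropoff_geom` in SRID 2163. The raw points are tagged as WGS 84 (SRID 4326) and then reprojected.

```sql
-- Sketch only: table name, column names, and target SRID are assumptions.
UPDATE rides
SET pickup_geom = ST_Transform(
        ST_SetSRID(ST_MakePoint(pickup_longitude, pickup_latitude), 4326), 2163),
    dropoff_geom = ST_Transform(
        ST_SetSRID(ST_MakePoint(dropoff_longitude, dropoff_latitude), 4326), 2163);
```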

This updates 10,906,860 rows of data on both columns, so it takes a while. Coffee is your friend. You might run into this error while the update happens:

Error: tuple decompression limit exceeded by operation
Error Code: 53400
Details: current limit: 100000, tuples decompressed: 10906860
Hint: Consider increasing timescaledb.max_tuples_decompressed_per_dml_transaction or set to 0 (unlimited).

To fix this, follow the hint in the error message: increase timescaledb.max_tuples_decompressed_per_dml_transaction, or set it to 0 (unlimited).
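Following the hint in the error message, one option is to lift the limit for the current session before you rerun the update. This sketch uses the unlimited setting. A specific higher value also works if you prefer to keep a guard in place.

```sql
-- Remove the per-transaction decompression limit for this session only.
SET timescaledb.max_tuples_decompressed_per_dml_transaction TO 0;
```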

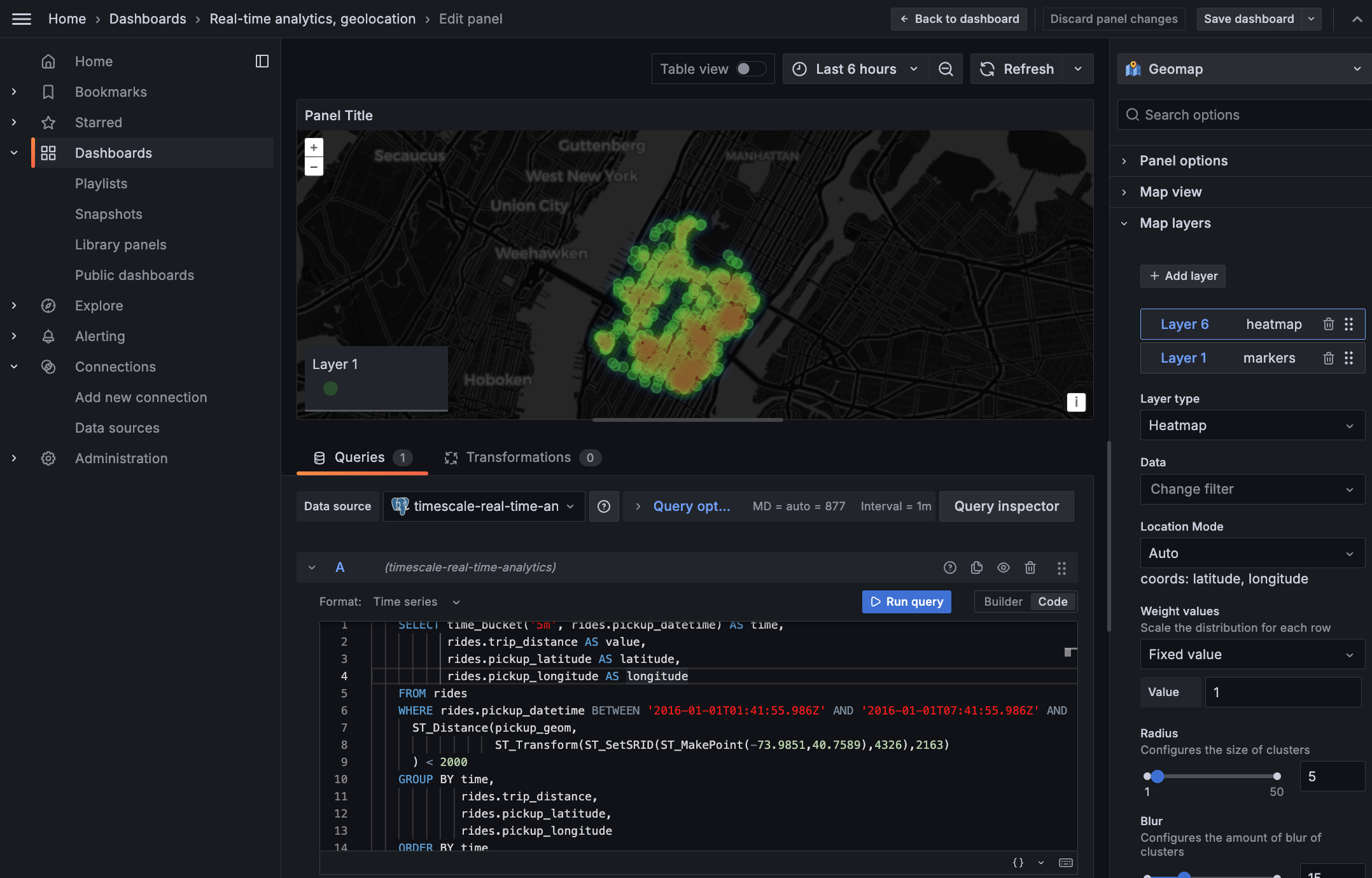

Visualize the area where you can make the most money

In this section you visualize a query that returns rides longer than 5 miles for trips taken within 2 km of Times Square. The data includes the distance travelled and is grouped (GROUP BY) by trip_distance and location so that Grafana can plot the data properly.

This enables you to see where a taxi driver is most likely to pick up a passenger who wants a longer ride, and make more money.

-

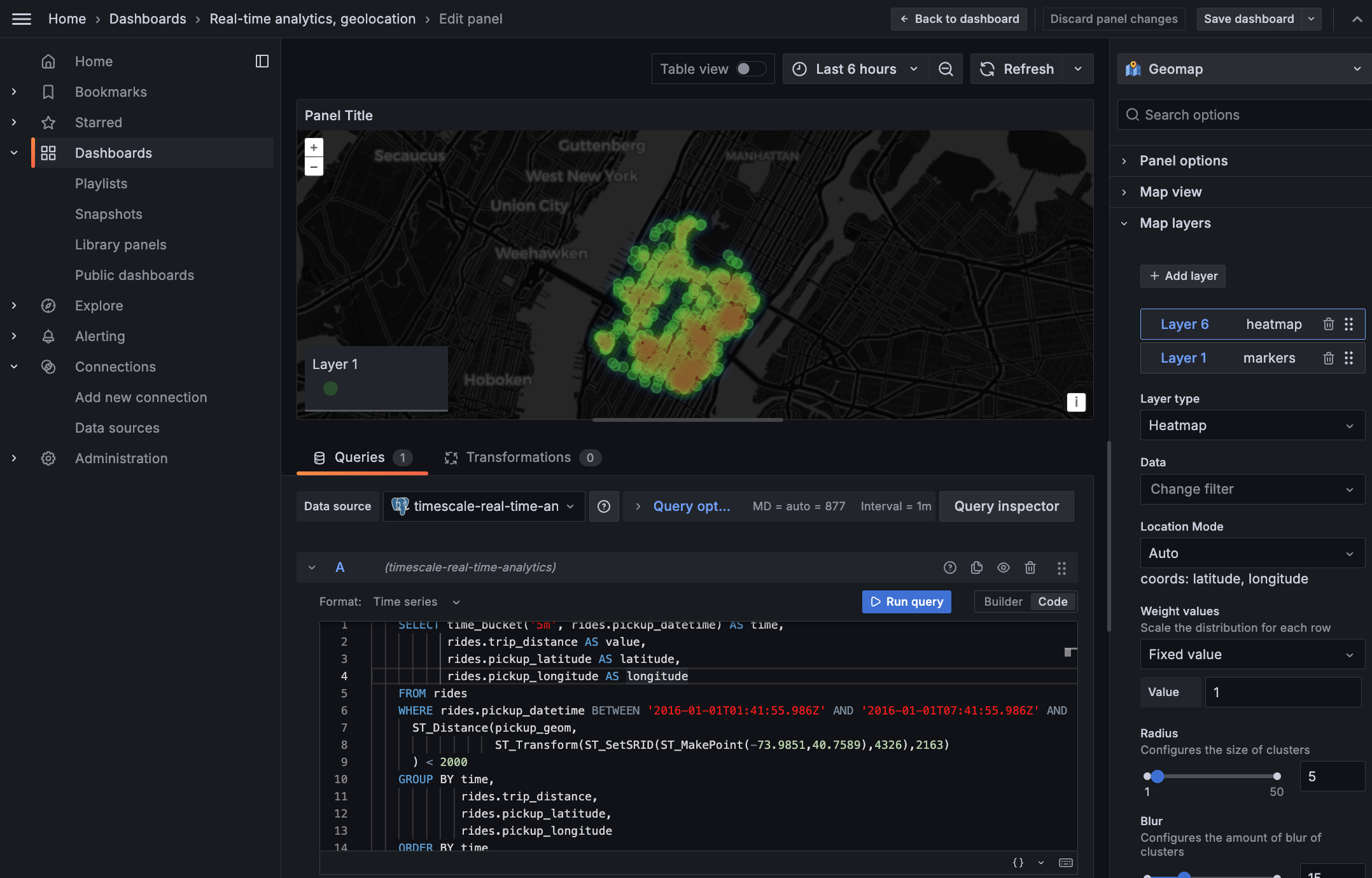

Create a geolocalization dashboard

- In Grafana, create a new dashboard that is connected to your data source with a Geomap visualization.

-

In the

Queriessection, selectCode, then select the Time seriesFormat.

-

To find rides longer than 5 miles in Manhattan, paste the following query:
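A sketch of a query that matches this description, not the exact query from the original page. It assumes the `rides` table, a `pickup_geom` column in SRID 2163 (meters), and approximate Times Square coordinates of longitude -73.9851, latitude 40.7589. The 5-minute bucket and the row limit are also illustrative choices.

```sql
-- Sketch only: table name, geometry column, SRID, coordinates, bucket
-- width, and LIMIT are assumptions.
SELECT
  time_bucket('5 minutes', pickup_datetime) AS "time",
  trip_distance    AS value,
  pickup_latitude  AS latitude,
  pickup_longitude AS longitude
FROM rides
WHERE $__timeFilter(pickup_datetime)
  AND trip_distance > 5                       -- rides longer than 5 miles
  AND ST_Distance(
        pickup_geom,
        ST_Transform(ST_SetSRID(ST_MakePoint(-73.9851, 40.7589), 4326), 2163)
      ) < 2000                                -- within 2 km of Times Square
GROUP BY "time", trip_distance, pickup_latitude, pickup_longitude
ORDER BY "time"
LIMIT 500;
```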

You see a world map with a dot on New York.

- Zoom into your map to see the visualization clearly.

- Customize the visualization

  - In the Geomap options, under Map Layers, click + Add layer and select Heatmap. You now see the areas where a taxi driver is most likely to pick up a passenger who wants a longer ride, and make more money.